Bert Kreischer Jail

Was Bert Kreischer actually under arrest? On the 224th episode of 2 Bears, 1 Cave, fans wondered why Kreischer wasn't on the show.

BERT, the language model, was trained on two novel unsupervised tasks that contribute to its contextual power.
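BERT the language model (not the comedian) was pretrained on two unsupervised tasks: masked language modeling (MLM) and next sentence prediction (NSP). The sketch below illustrates only the MLM input-corruption step, as described in the original BERT paper:

* About 15% of tokens are chosen as prediction targets.
* Each chosen token is replaced by `[MASK]` 80% of the time.
* It is replaced by a random token 10% of the time.
* It is left unchanged the remaining 10% of the time.

This is a minimal sketch under stated assumptions. It selects each token independently, which is a simplification of the original data script. It does not protect special tokens such as `[CLS]` and `[SEP]`. The names `mask_tokens` and `MASK_TOKEN` are hypothetical and are not a library API.

```python
import random

# Hypothetical names for illustration only; not part of any library API.
MASK_TOKEN = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=None):
    """Corrupt a token list for masked language modeling (simplified sketch).

    Each position is selected independently with probability `mask_prob`.
    A selected token is replaced by [MASK] 80% of the time, by a random
    vocabulary token 10% of the time, and left unchanged 10% of the time.

    Returns (inputs, labels): labels[i] holds the original token at selected
    positions (the prediction targets) and None everywhere else.
    """
    rng = rng or random.Random()
    inputs = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # not selected: the model is not asked to predict this position
        labels[i] = tok
        r = rng.random()
        if r < 0.8:
            inputs[i] = MASK_TOKEN  # 80%: hide the token behind [MASK]
        elif r < 0.9:
            inputs[i] = rng.choice(vocab)  # 10%: swap in a random token
        # remaining 10%: keep the original token as-is
    return inputs, labels


# Usage: corrupt a short sentence with a fixed seed for reproducibility.
sentence = "the comedian was never arrested it was a prank".split()
masked, targets = mask_tokens(sentence, vocab=sentence, rng=random.Random(1))
print(masked)
print(targets)
```

During pretraining, the model sees `inputs` and is scored only on the positions where `labels` is not `None`. To predict those tokens, it must use context from both the left and the right.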


To explain Kreischer's absence, Tom Segura told listeners that Kreischer was in jail. Plenty of seasoned fans recognized the prank for what it was, with a few taking to Instagram to comment that "Bert is probably so confused on why everyone thinks he's in jail."

BERT, the language model, is the basis for an entire family of models.

In today's video, we unravel the mystery surrounding comedian Bert Kreischer and the rumors of his arrest.

Recently, a shocking announcement was made during an episode of 2 Bears, 1 Cave. Kreischer has a loyal fan base that loves his humor and his personality, but rumors have been circulating online that he is in jail. What did he do to get arrested?

Rumors have circulated about whether Bert Kreischer is currently in jail. In this comprehensive article, we will delve into the various sources available to uncover the truth. One version of the rumor claimed that Kreischer was jailed following an altercation during a performance in Nashville, Tennessee. According to that version, a disagreement with a security guard escalated into…


Has Bert Kreischer been jailed?

Recent rumors swirling on social media suggested that Bert Kreischer had landed himself in jail, sending shockwaves across his fan base. As of 2023, Kreischer has no authentic mugshot, because he has not been involved in any criminal activity leading to an arrest. Any image resembling a mugshot would likely not be authentic. So was Bert Kreischer actually in jail?

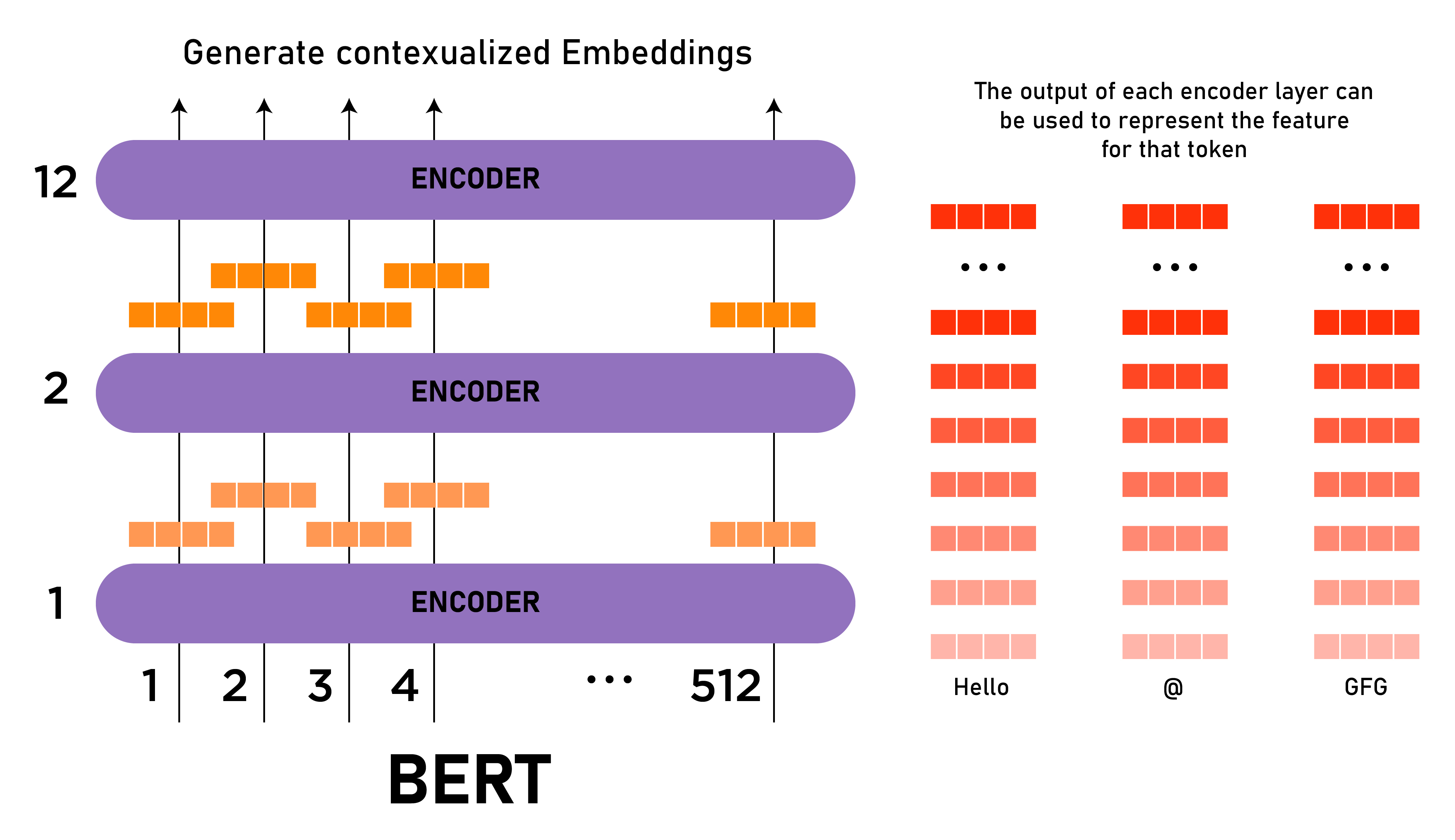

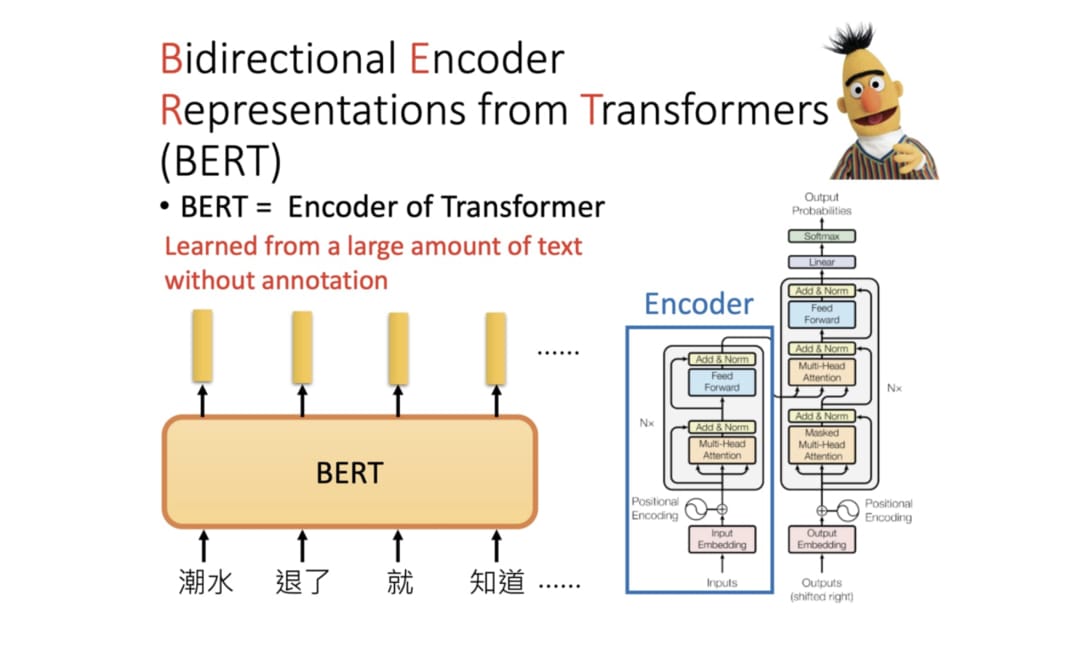

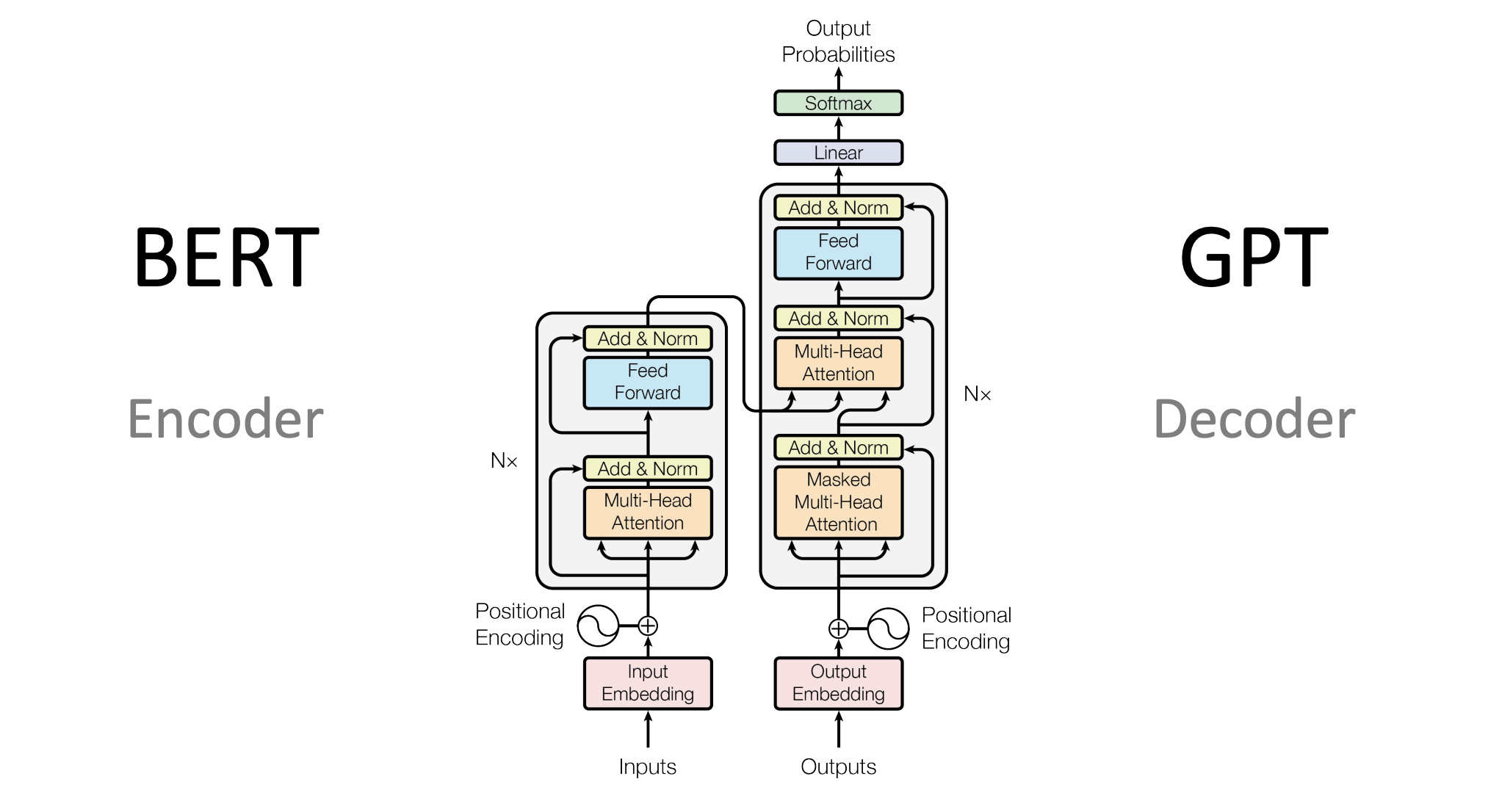

Turns out he was never arrested. It was just a prank instigated by Tom Segura. Obviously, many listeners didn't catch on to that part, and so the rumor spread.

Bidirectional Encoder Representations from Transformers (BERT) is a language model introduced in October 2018 by researchers at Google.[1][2] It learns to represent text as a sequence of vectors.

BERT Large uses 24 Transformer layers, 1024 hidden units, and 16 attention heads (340M parameters). One thing BERT can do is predict a masked ([MASK]) token from the words on both sides of it. For most text classification tasks, BERT Base provides an excellent balance of accuracy and efficiency.
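The 340M figure for BERT Large can be checked with a rough parameter count. The sketch below adds up the following components:

* the embedding tables;
* the attention and feed-forward weights in each layer;
* the pooler.

It excludes the MLM and NSP pretraining output heads. Several values are assumptions not stated in the text above: a 30,522-token WordPiece vocabulary, 512 positions, 2 segment types, and a feed-forward width of 4 × hidden (4096 for Large). The BERT Base configuration of 12 layers, 768 hidden units, and 3072 feed-forward units is also an assumption here. The function name `bert_param_count` is hypothetical. Note that the number of attention heads does not change the count, because heads split the hidden dimension rather than adding weights.

```python
def bert_param_count(num_layers, hidden, intermediate,
                     vocab_size=30522, max_positions=512, type_vocab=2):
    """Count trainable parameters of a BERT encoder plus pooler.

    Pretraining output heads are excluded. Default sizes are assumptions
    matching the original released BERT checkpoints.
    """
    layer_norm = 2 * hidden  # LayerNorm gain and bias vectors
    # Token, position and segment (token-type) embedding tables, then a LayerNorm.
    embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm
    # Q, K, V and output projections: four hidden x hidden matrices with biases.
    attention = 4 * (hidden * hidden + hidden) + layer_norm
    # Feed-forward block: hidden -> intermediate -> hidden, each with a bias.
    ffn = (hidden * intermediate + intermediate) + (intermediate * hidden + hidden) + layer_norm
    # Pooler: one dense hidden x hidden layer applied to the [CLS] vector.
    pooler = hidden * hidden + hidden
    return embeddings + num_layers * (attention + ffn) + pooler


large = bert_param_count(num_layers=24, hidden=1024, intermediate=4096)
base = bert_param_count(num_layers=12, hidden=768, intermediate=3072)
print(f"BERT Large: {large:,}")  # BERT Large: 335,141,888
print(f"BERT Base:  {base:,}")   # BERT Base:  109,482,240
```

The Large total of roughly 335M agrees with the rounded 340M figure. The exact number shifts slightly depending on vocabulary size and on whether the pooler and pretraining heads are counted.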

BERT (Bidirectional Encoder Representations from Transformers) is a groundbreaking model in natural language processing (NLP) that has significantly enhanced…
